Scaling B12 Recommendations with a human-in-the-loop recommender system

At B12, we combine the creativity and talent of human experts with the power and scalability of artificial intelligence (AI) to provide our customers with beautiful websites that grow with their businesses.

We are always looking for new ways to leverage AI to automate parts of our process so that our experts can focus on doing what they do best: designing and optimizing websites. Our customers also benefit from these efficiency gains, because we pass the cost savings on to them through lower prices and plans. In this post, we will describe how we combined adaptive machine learning with human expertise to auto-suggest personalized recommendations for our customers. This system eases the burden on our human experts, who make multiple recommendations to every one of our customers each month.

We released B12 Recommendations in March to help our customers keep their websites fresh and up to date. Every month, a team of experts looks at every B12 website and recommends updates focused on achieving our customers’ goals. However, B12’s customer base is growing rapidly, so we need to stretch a finite amount of expert attention across a larger set of customers each month. In addition, as our library of recommendations grows more flexible and comprehensive, it becomes harder for experts to find the perfect recommendations for each customer.

How do we continue to make recommendations that are personalized and valuable? In the rest of this post, we’ll introduce the Multi-Armed Bandit framework we use for making recommendations, explain some of the math behind it, and close with some learnings on deploying it in practice.

Personalized recommendations for every website

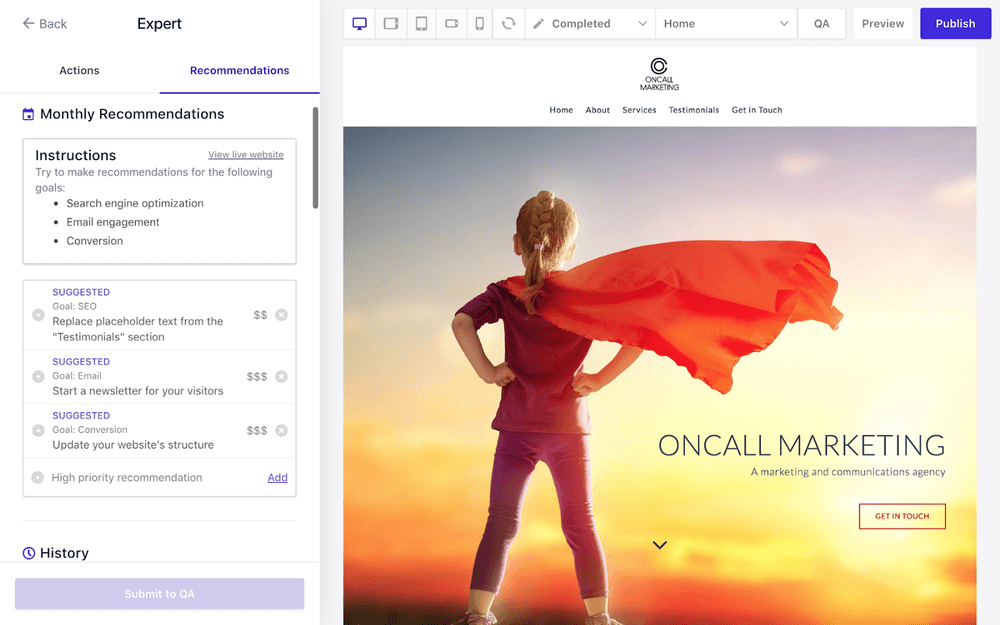

AI systems for auto-suggestion are all around you. Netflix recommends TV episodes for you to watch, Spotify curates a Discover Weekly playlist well-attuned to your musical tastes, and Tinder suggests romantic partners. The broad term for the software that powers such suggestions is “Recommender Systems,” and we leverage the same techniques to auto-suggest recommendations at B12. Rather than recommending directly to our customers, we first vet recommendations with our experts, allowing them to personalize the suggestions before they go live. They do so using the custom-built interface in Figure 1.

There are a number of commonly used approaches to building recommender systems. Collaborative filtering, perhaps the best-known approach, uses the history of user choices to recommend new items, on the assumption that similar users will like similar items. This works well for companies like Netflix, which have datasets of hundreds of millions of user-show pairings, but it performs poorly without many millions of data points, and B12’s recommendation history is much smaller than that.
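
For contrast, here is a minimal sketch of user-based collaborative filtering, which is not the approach B12 uses. It assumes binary purchase data in a toy matrix (the data and function names are hypothetical) and scores items a user hasn’t bought by summing other users’ purchases, weighted by how similar those users are. With only a few overlapping histories, most similarities are zero and the scores carry little signal, which is the sparsity problem described above.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def recommend(purchases, user):
    """Rank items the user hasn't bought by similarity-weighted purchases of other users.

    purchases: one row per user; purchases[u][j] is 1 if user u bought item j, else 0.
    """
    scores = {}
    for other, row in enumerate(purchases):
        if other == user:
            continue
        sim = cosine(purchases[user], row)
        for item, bought in enumerate(row):
            if bought and not purchases[user][item]:
                scores[item] = scores.get(item, 0.0) + sim
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical toy data: 4 users x 4 items.
purchases = [
    [1, 1, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 1, 1],
    [0, 0, 0, 1],
]
print(recommend(purchases, 0))  # → [2, 3]
```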

Instead, we wanted an adaptive approach that takes advantage of available knowledge and also learns and improves with each new observed datapoint. For this reason, we chose to build our recommender system using the Multi-Armed Bandit (MAB) framework, which learns well from smaller datasets and adapts its model as it makes recommendations.

The MAB problem is usually described in the context of gambling. Suppose you are on the floor of a Vegas casino and can sit down at any of the many slot machines in the room. Each slot machine (arm) has an unknown probability of making you money. For example, one machine might pay out one dollar 10% of the time, while another might pay out one million dollars 0.000001% of the time. The best slot machine to play is the one with the highest expected payout: if you played every machine forever, it’s the one you’d make the most money from. Unfortunately, you don’t have the time (or the quarters!) to play every machine forever, so the challenge is not just finding the best slot machine, but finding it efficiently without losing money as you go. To maximize your earnings and learnings in this environment, you have to balance exploration (searching for the best slot machine) and exploitation (playing the best slot machine seen so far).
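
To make the explore-exploit balance concrete, here is a small simulation of the casino using Beta-Bernoulli Thompson sampling, the non-contextual cousin of the algorithm described later in this post. It is a sketch under simplifying assumptions: each machine pays exactly 1 with a fixed, made-up probability, and the function name is hypothetical.

```python
import random

def thompson_slots(payout_probs, pulls, seed=0):
    """Beta-Bernoulli Thompson sampling over slot machines that each pay 1 with a fixed probability.

    Returns how many times each machine was played.
    """
    rng = random.Random(seed)
    wins = [0] * len(payout_probs)   # observed payouts per machine
    fails = [0] * len(payout_probs)  # observed non-payouts per machine
    plays = [0] * len(payout_probs)
    for _ in range(pulls):
        # Draw a plausible payout rate for each machine from its Beta(1 + wins, 1 + fails)
        # posterior. Machines we know little about produce widely spread draws, so they still
        # get tried (exploration); machines that have paid well tend to draw high (exploitation).
        samples = [rng.betavariate(1 + w, 1 + n) for w, n in zip(wins, fails)]
        arm = max(range(len(samples)), key=samples.__getitem__)
        plays[arm] += 1
        if rng.random() < payout_probs[arm]:
            wins[arm] += 1
        else:
            fails[arm] += 1
    return plays

plays = thompson_slots([0.1, 0.3, 0.6], pulls=5000)
print(plays)
```

Most of the 5,000 pulls end up on the third machine, while the weaker machines are tried just often enough to rule them out.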

What does this have to do with B12 Recommendations? If you think of each recommendation as a slot machine, and each arm pull as an opportunity for a customer to purchase a recommendation (giving B12 a payout), the analogy is straightforward: we’re trying to figure out which recommendation is best to send to a given customer. We can actually do even better, because we can use contextual information about our customers to improve recommendations: similar customers with similar websites will probably want similar recommendations. This can be incorporated into the MAB framework via an extension known as the contextual bandit, which takes into account information about each decision (the context) beyond just which arm you pull.

The nitty-gritty of the algorithm

Enough of the flowery exposition: it’s time to get down to brass tacks. If mathematics is not your, shall we say, cup of chrysanthemum, you might want to skip a couple sections. If mathematics is literally your favorite beverage, check out the full algorithm with associated proofs in the paper that developed it.

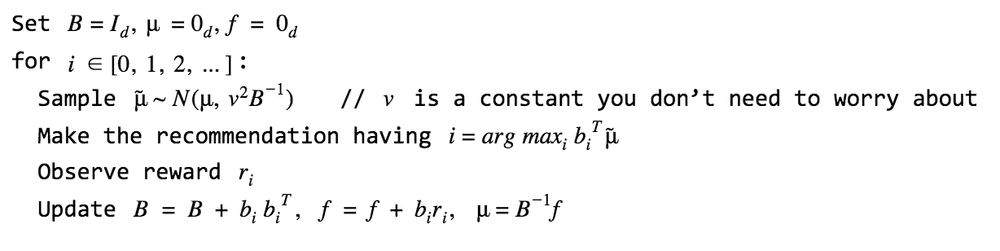

Let’s say there are a fixed number of facts about our customers, their websites, and specific recommendations that matter for predicting whether or not a customer will buy a recommendation. For example, we could use the number of pages on a customer’s website, or the number of times a particular recommendation was purchased in the past. We call these facts features, and the main goal of the algorithm is to train a model that predicts, given the feature vector for a given customer and a given recommendation, how likely the customer is to purchase the recommendation. In math, if d is the number of features, bᵢ ∈ ℝᵈ is the feature vector representing the iᵗʰ customer-recommendation pair, and rᵢ ∈ [0, 1] is the reward (payout) representing how much the customer spent on the recommendation, scaled to lie between 0 and 1, we’re trying to learn a model μ ∈ ℝᵈ such that bᵢᵀμ ≈ rᵢ for all i. If we have that model, then our recommendation task is easy: for a given customer, we make the candidate recommendation with the largest expected reward (arg maxᵢ bᵢᵀμ).
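
Once μ is known, picking a recommendation is a dot product and an argmax. A minimal sketch, assuming each candidate recommendation for one customer is already encoded as a row of features; the three features and the weight values are hypothetical:

```python
import numpy as np

def pick_recommendation(candidates, mu):
    """Return the index i that maximizes the predicted reward b_i^T mu.

    candidates: array of shape (n, d), one feature vector b_i per candidate recommendation
    mu: weight vector of shape (d,)
    """
    expected_rewards = candidates @ mu
    return int(np.argmax(expected_rewards))

# Hypothetical features: [pages on site (scaled), past purchase rate of this recommendation, bias]
candidates = np.array([
    [0.2, 0.10, 1.0],
    [0.2, 0.40, 1.0],
    [0.2, 0.05, 1.0],
])
mu = np.array([0.1, 0.8, 0.05])
print(pick_recommendation(candidates, mu))  # → 1
```

The predicted rewards here are 0.15, 0.39, and 0.11, so the second candidate wins.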

This might look familiar to you: it’s the definition of linear regression. The difference is that linear regression gets to learn the model from a pre-existing dataset of customers and recommendations, whereas our contextual bandit algorithm must learn the model while simultaneously picking which recommendation to make to which customers.
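
To make the connection concrete: if all the rewards were available up front, one standard way to fit μ would be ridge regression (least squares with a small penalty on the size of the weights). Assuming the usual setup for linear Thompson sampling, where B starts as the d × d identity matrix, the closed-form solution after t observations and its one-observation-at-a-time form are:

```latex
% Ridge regression: \mu_t minimizes \sum_{i=1}^{t} (b_i^\top \mu - r_i)^2 + \lVert \mu \rVert^2
B_t = I_d + \sum_{i=1}^{t} b_i b_i^\top, \qquad
f_t = \sum_{i=1}^{t} r_i \, b_i, \qquad
\mu_t = B_t^{-1} f_t

% Adding observation t+1 only needs a rank-one update:
B_{t+1} = B_t + b_{t+1} b_{t+1}^\top, \qquad
f_{t+1} = f_t + r_{t+1} \, b_{t+1}
```

Setting the gradient of the penalized loss to zero gives (I_d + Σ bᵢbᵢᵀ)μ = Σ rᵢbᵢ, which is exactly B_t μ_t = f_t. The incremental form is what lets a bandit refine μ after every observed reward instead of refitting from scratch.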

How does it do that? The algorithm we use, Thompson sampling (chosen for its strong empirical performance), takes a Bayesian approach: instead of a single weight vector μ, our model is a multivariate Gaussian distribution with mean μ and covariance proportional to B⁻¹, where B is a matrix that captures how confident we are about the weight of each feature. Algorithm 1 lays out the steps. At each opportunity, the algorithm samples a weight vector from this distribution, recommends to the customer the recommendation whose feature vector bᵢ scores highest under that sample (its best bet at the present time, given its uncertainty), and then uses the observed reward rᵢ to update B and μ. The update to B reflects that we have new information, good or bad: we’re more confident that we made a good recommendation if the customer purchased it, and we’re more confident that we made a bad recommendation if the customer didn’t purchase it. The update to μ brings the feature weights closer to the linear regression solution. Over time, B⁻¹ becomes small, causing recommendations to trust μ more, and μ provably converges to the optimal model.
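
Below is a minimal sketch of linear Thompson sampling in NumPy. It is an illustration, not B12’s production code: the class name is hypothetical, the covariance is scaled by v² (an exploration knob whose default here is arbitrary), and the simulation draws rewards from an invented "true" weight vector with a little noise.

```python
import numpy as np

class LinearThompsonSampler:
    """Linear Thompson sampling with a Gaussian posterior N(mu, v^2 * B^-1) over feature weights."""

    def __init__(self, d, v=0.5, seed=0):
        self.B = np.eye(d)     # grows as observations arrive; B^-1 shrinks
        self.f = np.zeros(d)   # running sum of r_i * b_i
        self.mu = np.zeros(d)  # posterior mean, always equal to B^-1 f
        self.v = v             # exploration scale (hypothetical default)
        self.rng = np.random.default_rng(seed)

    def choose(self, candidates):
        """Sample weights from the posterior; return the index of the best candidate under them."""
        cov = self.v ** 2 * np.linalg.inv(self.B)
        mu_sample = self.rng.multivariate_normal(self.mu, cov)
        return int(np.argmax(candidates @ mu_sample))

    def update(self, b, r):
        """Fold one observed (feature vector, reward) pair into the posterior."""
        self.B += np.outer(b, b)
        self.f += r * b
        self.mu = np.linalg.solve(self.B, self.f)

# Simulation with made-up weights, used only to generate rewards.
rng = np.random.default_rng(1)
true_mu = np.array([0.6, -0.2, 0.3])
sampler = LinearThompsonSampler(d=3)
for _ in range(2000):
    candidates = rng.uniform(0, 1, size=(5, 3))  # 5 candidate recommendations per round
    i = sampler.choose(candidates)
    reward = candidates[i] @ true_mu + rng.normal(0, 0.05)
    sampler.update(candidates[i], reward)
print(np.round(sampler.mu, 2))
```

After a couple of thousand rounds, the learned mean sits close to the simulated weights, and the shrinking posterior means later choices are driven mostly by μ rather than by exploration.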

And that’s it! Following this algorithm over time efficiently navigates the explore-exploit tradeoff, learning about new recommendations while taking advantage of known high-yield ones.

Theory, meet practice

“In theory, there is no difference between practice and theory. In practice, there is.”

The algorithm described above, though simple to write down, has limitations that make it hard to put into production. For example, it can be computationally expensive in high dimensions, since parameter updates require inverting a d × d matrix, and in practice we don’t immediately observe whether or not a customer purchases a recommendation (it can take days or even weeks!). Luckily, we’re not alone in facing these challenges, and we were able to adopt techniques from the literature to make the system usable in practice.

Feature extraction and dimensionality reduction. The contextual multi-armed bandit algorithm won’t be very useful if the information in the context vectors isn’t sufficient to predict whether a customer will purchase a given recommendation. However, if we add too many features, updating the algorithm and making recommendations becomes slow (and we make recommendations in real time as the expert loads the interface, so it needs to be snappy). We therefore opted for a tradeoff: extensive feature extraction to ensure that our context vectors were rich with features the algorithm can use to discriminate between recommendation choices, followed by dimensionality reduction using principal component analysis (PCA) to keep the dimension of our context vectors small. Our context vectors include a mix of customer-specific features and recommendation-specific features. Customer-specific features include age with B12, business category, customer goals, number of subscriptions and integrations, number of website pages and sections, and recommendation purchasing history. Recommendation-specific features include recommendation cost and the number of times a recommendation has previously been made and purchased.
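
A minimal sketch of this pipeline, assuming a handful of numeric features (the dictionary keys are hypothetical stand-ins for the features listed above, and categorical features such as business category are left out for brevity): raw customer and recommendation features are concatenated, standardized so large-valued features like cost don’t dominate, and projected onto the top k principal components computed with an SVD.

```python
import numpy as np

def raw_context(customer, rec):
    """Concatenate hypothetical customer-specific and recommendation-specific features."""
    return np.array([
        customer["months_with_b12"],
        customer["num_pages"],
        customer["num_subscriptions"],
        customer["past_purchases"],
        rec["cost"],
        rec["times_made"],
        rec["times_purchased"],
    ], dtype=float)

def fit_pca(X, k):
    """Fit PCA on rows of X (n samples x D raw features); return (mean, scale, components)."""
    mean = X.mean(axis=0)
    scale = X.std(axis=0)
    scale[scale == 0] = 1.0  # leave constant features unscaled instead of dividing by zero
    # Rows of Vt are the principal directions, ordered by decreasing singular value.
    _, _, Vt = np.linalg.svd((X - mean) / scale, full_matrices=False)
    return mean, scale, Vt[:k]

def project(x, mean, scale, components):
    """Map raw context vector(s) into the k-dimensional space the bandit works in."""
    return ((x - mean) / scale) @ components.T

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 7)) * [12, 5, 1, 3, 50, 100, 20]  # fake raw features with mixed scales
mean, scale, comps = fit_pca(X, k=3)
x = raw_context(
    {"months_with_b12": 8, "num_pages": 6, "num_subscriptions": 1, "past_purchases": 2},
    {"cost": 49, "times_made": 120, "times_purchased": 30},
)
print(project(x, mean, scale, comps).shape)  # → (3,)
```

Because the projection is fitted once and then applied as a single matrix multiply, it adds little latency when the expert loads the interface, and the bandit only ever works with k-dimensional vectors.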

Non-stationarity. As B12 grows and changes, the attributes of our typical customer evolve. Over time, typical customers might vary in their industry, the complexity and design of their websites, and their willingness to spend money on recommendations. This makes B12 Recommendations what is known as a non-stationary system. Our algorithm, however, is designed to get more and more confident about a single static model of recommendation quality, and will therefore get more and more out of touch with our changing customer base. One common technique to address this (see section 6.3 of Russo et al.) is to simply forget old observations so that our model is always grounded in recent data, an approach that also reduces data storage: there is no need to keep old context vectors or rewards. To put this into practice, we modified Algorithm 1 with a windowed update scheme that incorporates new observations while forgetting old ones.
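
The post doesn’t spell out the exact windowed scheme. A minimal sketch, assuming a fixed-size window of the most recent observations (the class name and window size are hypothetical), keeps B equal to the identity plus the contributions of only the last `window` observations, and f likewise:

```python
from collections import deque

import numpy as np

class WindowedPosterior:
    """B and f built from only the most recent `window` observations.

    Each new (b, r) is added to the running sums; once the window is full,
    the oldest observation is subtracted back out, so the model forgets it exactly.
    """

    def __init__(self, d, window):
        self.B = np.eye(d)
        self.f = np.zeros(d)
        self.window = window
        self.history = deque()  # only the observations still inside the window

    def update(self, b, r):
        b = np.asarray(b, dtype=float)
        self.B += np.outer(b, b)
        self.f += r * b
        self.history.append((b, r))
        if len(self.history) > self.window:
            old_b, old_r = self.history.popleft()
            self.B -= np.outer(old_b, old_b)
            self.f -= old_r * old_b

    @property
    def mu(self):
        return np.linalg.solve(self.B, self.f)

w = WindowedPosterior(d=2, window=2)
for b, r in [([1, 0], 1), ([0, 1], 0), ([1, 1], 1)]:
    w.update(b, r)
print(w.mu.tolist())  # the first observation has been forgotten
```

Repeatedly adding and subtracting outer products can accumulate floating-point error over very long runs; periodically recomputing B and f from the stored window is a cheap safeguard.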

Asynchronous rewards. Another problem we faced was that rewards are not received instantly, as they are in the casino scenario. Instead, we get new information when a customer purchases a recommendation, which might happen minutes or weeks after it was made. Implementation-wise, this wasn’t challenging: we scheduled a cron job to periodically check whether any new purchases have occurred and update our model parameters when they have. However, the implications of this approach are important: the algorithm learns in batches, and it doesn’t benefit from new information between batches, because when it makes the next recommendation it doesn’t yet know whether its previous one will be purchased.
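
One way to structure this, sketched here with hypothetical names: store the context vector of every suggestion when it is made, keyed by a recommendation ID, and let the periodic job fold in whatever outcomes have resolved since its last run. How long to wait before treating an unpurchased suggestion as a reward of 0 is a policy choice the post doesn’t specify; in this sketch, the caller decides.

```python
import numpy as np

class DelayedRewardBuffer:
    """Remember the context of each suggestion until its purchase outcome is known."""

    def __init__(self):
        self.pending = {}  # recommendation ID -> feature vector b shown to the customer

    def record(self, rec_id, b):
        self.pending[rec_id] = np.asarray(b, dtype=float)

    def apply_batch(self, B, f, resolved):
        """Fold resolved outcomes {rec_id: reward} into B and f in place; return how many applied."""
        applied = 0
        for rec_id, reward in resolved.items():
            b = self.pending.pop(rec_id, None)
            if b is None:
                continue  # unknown or already-applied ID
            B += np.outer(b, b)
            f += reward * b
            applied += 1
        return applied

buf = DelayedRewardBuffer()
B, f = np.eye(2), np.zeros(2)
buf.record("rec-1", [1.0, 0.0])
buf.record("rec-2", [0.0, 1.0])
# Later, the periodic job learns that rec-1 was purchased; rec-2 is still undecided.
n = buf.apply_batch(B, f, {"rec-1": 1.0})
print(n, np.linalg.solve(B, f).tolist())  # → 1 [0.5, 0.0]
```

Between runs of the job, every recommendation is made with the same B and μ, which is exactly the batch-learning behavior described above.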

The cold-start problem. In Algorithm 1, the model is initialized with an uninformative prior (zero mean and B equal to the identity), meaning that initially the algorithm has no reason to prefer any recommendation over another. This reflects that we assume zero knowledge about our customers and recommendations, which is nice for the theory but means that in practice our first several hundred recommendations won’t be great. This is called the cold-start problem of recommender systems. Luckily, we can get around it by remembering that we already have information about which customers like which recommendations: the existing history of B12 Recommendations purchases. To take advantage of that knowledge, we ran the algorithm over our historical data in simulation and initialized our model from the resulting parameters. This ensured that our model encoded some prior information derived from our data even before we started making any recommendations, so that its very first recommendations were valuable.
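
A minimal sketch of the warm start, assuming the history is available as logged (feature vector, reward) pairs and that replaying them through the same B and f updates is an acceptable stand-in for the full simulation described above (the function name and data are hypothetical):

```python
import numpy as np

def warm_start(history, d):
    """Replay historical (feature vector, reward) pairs to build an informed starting model.

    Returns (B, f, mu) for the bandit to start from, instead of B = I and mu = 0.
    """
    B = np.eye(d)
    f = np.zeros(d)
    for b, r in history:
        b = np.asarray(b, dtype=float)
        B += np.outer(b, b)
        f += r * b
    return B, f, np.linalg.solve(B, f)

# Hypothetical history: recommendations with feature 0 were bought twice; feature 1's wasn't.
history = [([1, 0], 1.0), ([1, 0], 1.0), ([0, 1], 0.0)]
B, f, mu = warm_start(history, d=2)
print(mu.tolist())
```

The resulting μ already favors the kind of recommendation that sold in the past, and B encodes how much evidence backs that preference, so exploration starts from an informed position rather than from scratch.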

Results from the field

Our recommender system went live at the beginning of August 2018, so we can’t give a full report on algorithm performance just yet. Fortunately, we set ourselves up for success by writing code that monitors the system weekly to see how it is performing on key metrics, such as the percentage of customers who opt in to recommendations and the average amount of money customers spend on recommendations.

In addition, we have some encouraging qualitative results from our B12 experts. Since the algorithm is still learning, experts didn’t always find the auto-suggestions to be relevant. But when they were relevant, experts noted that the suggestions “dramatically speeded up the workflow” and “brought to mind improvements [they] wouldn’t necessarily consider.”

An unexpected benefit of the algorithm was an increase in the diversity of the recommendations we made to our customers. Quantitatively, auto-suggested recommendations were statistically significantly more likely to be unique than expert-suggested recommendations, and qualitatively, our QA team observed an increase in the diversity of experts’ recommendations.
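
The post doesn’t say which statistical test was used or how "unique" was counted. As an illustration only, a two-proportion z-test is one standard way to check whether auto-suggested recommendations are unique more often than expert-suggested ones; the counts below are invented for the example and are not B12’s data.

```python
import math

def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference between two proportions, using the pooled estimate.

    Returns (z, p_value).
    """
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability of the standard normal
    return z, p_value

# Invented counts: unique recommendations out of all recommendations in each group.
z, p = two_proportion_z_test(60, 100, 45, 100)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A positive z with a small p-value would indicate that the auto-suggested group is unique more often than chance alone would explain.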

Wrapping up

With a customer base quickly outpacing the number of human experts, we needed to invest in technology to scale our personalized website recommendation service. Our recommender system uses a contextual multi-armed bandit algorithm to adaptively learn about our customers and recommendations as it auto-suggests personalized recommendations. Over time, the recommendations will get better and better!

Getting a recommender system in place was a great first step, but there’s always room for improvement. In the coming months, we plan to make some important improvements to the model. First, we’ll adapt the algorithm to learn not just from customer purchasing decisions, but also from expert behavior. Whether or not an expert chooses to keep a suggested recommendation is already a good signal of the suggestion’s quality, even if the customer doesn’t end up buying it. Second, we will use our domain knowledge to implement smarter constraints on the set of recommendations the algorithm can suggest. For example, we can leverage our knowledge about the structure of customer websites to avoid suggesting “add a testimonial page” to a customer who already has one.
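
One simple way to express such constraints, sketched here with hypothetical field names (`requires_missing_page`, `pages`), is to filter the candidate set before the bandit scores it, so the algorithm never considers recommendations that don’t apply to a given site:

```python
def eligible(candidates, site):
    """Drop recommendations that would add a page the customer's site already has.

    candidates: list of dicts; 'requires_missing_page' names a page type, or is None if unconstrained
    site: dict whose 'pages' entry is a set of the page types currently on the site
    """
    return [rec for rec in candidates if rec.get("requires_missing_page") not in site["pages"]]

candidates = [
    {"name": "add a testimonial page", "requires_missing_page": "testimonials"},
    {"name": "refresh homepage photos", "requires_missing_page": None},
]
site = {"pages": {"home", "about", "testimonials"}}
print([rec["name"] for rec in eligible(candidates, site)])  # → ['refresh homepage photos']
```

Filtering before scoring keeps the bandit’s math unchanged; the constraint only shrinks the set of arms it can pull for that customer.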

This system is just one of the many products we are developing at B12 to automate the rote, repetitive parts of work and free up our experts to focus on the creative aspects of website design and growth, which ultimately lowers costs for our customers. We can’t wait to share future ideas with you as we continue to create a brighter future of work!

Want to join us in shaping the future of work? Drop us a line and a résumé at hello@b12.io
